Keynote speakers

| Speaker | Keynote |

|---|---|

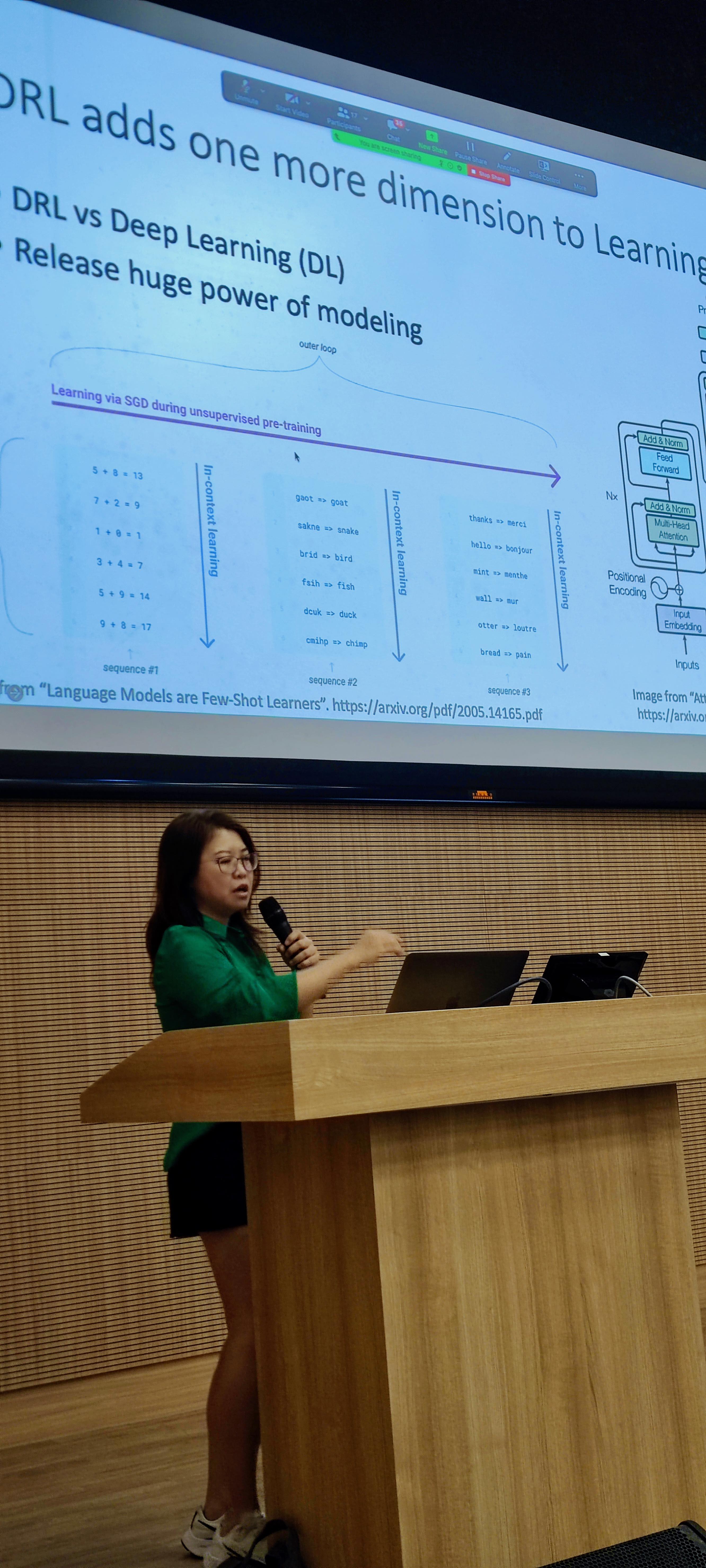

| Grace Hui Yang

Dr. Grace Hui Yang is an Associate Professor in the Department of Computer Science at Georgetown University. Dr. Yang is leading the InfoSense (Information Retrieval and Sense-Making) group at Georgetown University, Washington D.C. Dr. Yang obtained her Ph.D. from Carnegie Mellon University in 2011. Her current research interests include deep reinforcement learning, interactive agents, and human-centered AI. Prior to this, she conducted research on question answering, automatic ontology construction, near-duplicate detection, multimedia information retrieval, and opinion and sentiment detection. Dr. Yang's research has been supported by the Defense Advanced Research Projects Agency (DARPA) and the National Science Foundation (NSF). Dr. Yang co-organized the Text Retrieval Conference (TREC) Dynamic Domain Track from 2015 to 2017 and led the effort for SIGIR privacy-preserving information retrieval workshops from 2014 to 2016. Dr. Yang has served on the editorial boards of ACM TOIS and Information Retrieval Journal (from 2014 to 2017) and has actively served as an organizing or program committee member in many conferences such as SIGIR, ECIR, ACL, AAAI, ICTIR, CIKM, WSDM, and WWW. She is a recipient of the NSF Faculty Early Career Development Program (CAREER) Award.

|

High-Quality Diversification for Task-Oriented Dialogue Systems Many task-oriented dialogue systems use deep reinforcement learning (DRL) to learn policies that respond to the user appropriately and complete the tasks successfully. Training DRL agents with diverse dialogue trajectories prepare them well for rare user requests and unseen situations. One effective diversification method is to let the agent interact with a diverse set of learned user models. However, trajectories created by these artificial user models may contain generation errors, which can quickly propagate into the agent’s policy. It is thus important to control the quality of the diversification and resist the noise. In this paper, we propose a novel dialogue diversification method for task-oriented dialogue systems trained in simulators. Our method, Intermittent Short Extension Ensemble (I-SEE), constrains the intensity to interact with an ensemble of diverse user models and effectively controls the quality of the diversification. Evaluations show that I-SEE successfully boosts the performance of several DRL dialogue agents. |

| Chirag Shah

Dr. Chirag Shah is a Professor in Information School, an Adjunct Professor in Paul G. Allen School of Computer Science & Engineering, and an Adjunct Professor in Human Centered Design & Engineering (HCDE) at University of Washington (UW). He is the Founding Director of InfoSeeking Lab and the Founding Co-Director of RAISE, a Center for Responsible AI. His research revolves around intelligent systems. He received his PhD in Information Science from University of North Carolina (UNC) at Chapel Hill.

|

What does Fairness in Information Access Mean and Can We Achieve It? Bias in data as well as lack of transparency and fairness in algorithms are not new problems, but with the increasing scale, complexity, and adoption, most AI systems are suffering from these issues at a level unprecedented. Information access systems are not spared since these days, almost all large-scale systems of information access are mediated by algorithms. These algorithms are optimized not only for relevance, which is subjective to begin with, but also for measures of engagement and impressions. They are picking up signals of what may be 'good' from individuals and perpetuating that through learning methods that are opaque and hard to debug. Considering 'fairness' and introducing more transparency can help, but it can also backfire or create other issues. We also need to understand how and why users of these systems engage with content. In this talk, I will share some of our attempts for bringing fairness in ranking systems and then talk about how the solutions are not that simple. |

| Chenliang Li

Dr. Chenliang Li is a Professor at School of Cyber Science and Engineering, Wuhan University. His research interests inlcude information retrieval, natural language processing and social media analysis. He has published over 90 research papers on leading academic conferences and journals such as SIGIR, ACL, WWW, IJCAI, AAAI, TKDE and TOIS. He has served as Associate Editor / Editorial Board Member for ACM TOIS, ACM TALLIP, IPM and JASIST. His research won the SIGIR 2016 Best Student Paper Honorable Mention and TKDE Featured Spotlight Paper.

|

Recent Advances in Candidate Matching The candidate matching, i.e., retrieving potentially relevant candidate items to users for further ranking, is highly important since even the cleverest housewife cannot bake bread without flour. While many research efforts are devoted to the ranking stage, few works focus on the former one where the space for more sophisticated models seems to be limited due to the requirement of low computation cost, especially for an industrial system. No matter which scenario, the matching stage is highly important since even the cleverest housewife cannot bake bread without flour. In this talk, we will introduce the background knowledge towards this task at first. Then, an overview on the recent efforts of this line will be presented. At last, we will discuss the possible directions in the near future. |

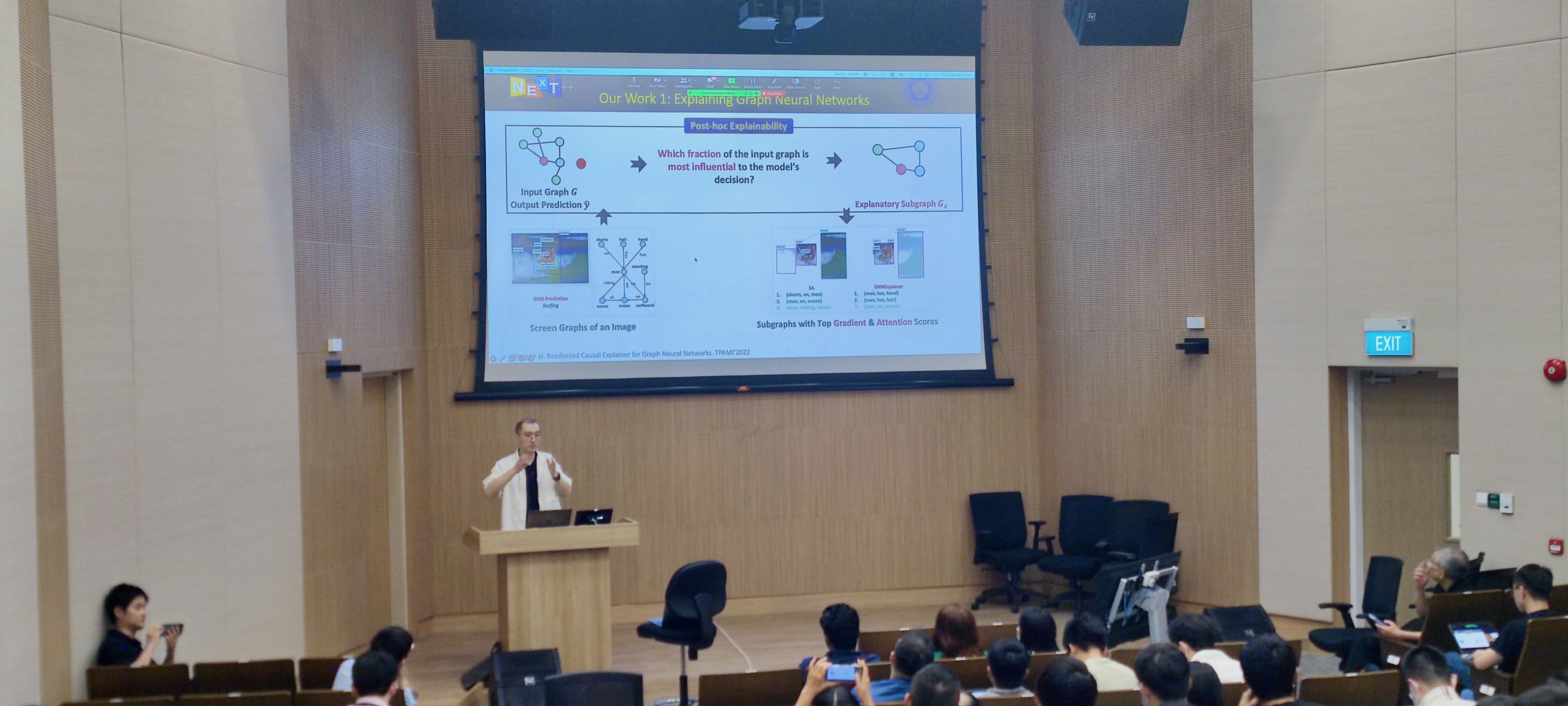

| Xiang Wang

Dr. Wang Xiang is a Professor in University of Science and Technology of China, where he is a member of Lab of Data Science. With his colleagues, students, and collaborators, he strives to develop trustworthy deep learning and artificial intelligence algorithms with better interpretability, generalization, and robustness. His research is motivated by, and contributes to, graph-structured applications in information retrieval (e.g., personalized recommendation), data mining (e.g., graph pre-training), security (e.g., fraud detection in fintech, information security in system), and multimedia (e.g., video question answering). His work has over 50 publications in top-tier conferences and journals. Over 10 papers have been featured in the most cited and influential list (e.g., KDD 2019, SIGIR 2019, SIGIR 2020, SIGIR 2021) and best paper finalist (e.g., WWW 2021, CVPR 2022). Moreover, He has served as the PC member for top-tier conferences including NeurIPS, ICLR, SIGIR and KDD, and the invited reviewer for prestigious journals including JMLR, TKDE, and TOIS.

|

Explainability of Graph Neural Networks Graph Neural Networks (GNNs) are powerful models to exploit the high-order relationship between entities on graphs. Despite the superior performance, we have little knowledge about the explainability of GNNs. In this talk, we will introduce two themes of explainability, (1) Post-hoc explainability: Using an additional explainer method to explain a black-box model post hoc, but explanations could be unfaithful to the decision-making process of a model; (2) Intrinsic Interpretability: Incorporating a rationalization module into the model design, so as to transform a black-box to a white-box. We find causal theory is one promising solution and we will discuss interpretability and generalization. |