Keynote Speakers

The following speakers have accepted to give keynotes at SSNLP 2019. You can view the detailed information by clicking the images.

Speaker: Rada Mihalcea

Abstract: Words are the central units of the languages we all speak. In a similar way, word representations are the core units of the algorithms that we build to automatically process language, and they form the foundation for many applications of natural language processing, ranging from question answering systems, to chat bots, search engines, or systems for machine translation. In this talk, I will take a deep dive into word embeddings, and address two aspects of word embeddings. First, I will take a close look at their stability, and show that even relatively high frequency words are often unstable. I will provide empirical evidence for how various factors contribute to the stability of word embeddings, and analyze the effects of stability on downstream tasks. Second, I will explore the relation between words and people and show how we can develop cross-cultural word representations to identify words with cultural influence, and also how we can effectively use information about the people behind the words to build better word representations. This is joint work with Laura Burdick, Aparna Garimella, Carmen Banea.

Bio: Rada Mihalcea is a Professor of Computer Science and Engineering at the University of Michigan and the Director of the Michigan Artificial Intelligence Lab. Her research interests are in computational linguistics, with a focus on lexical semantics, multilingual natural language processing, and computational social sciences. She serves or has served on the editorial boards of the Journals of Computational Linguistics, Language Resources and Evaluations, Natural Language Engineering, Journal of Artificial Intelligence Research, IEEE Transactions on Affective Computing, and Transactions of the Association for Computational Linguistics. She was a program co-chair for EMNLP 2009 and ACL 2011, and a general chair for NAACL 2015 and *SEM 2019. She currently serves as the ACL Vice-President Elect. She is the recipient of a National Science Foundation CAREER award (2008) and a Presidential Early Career Award for Scientists and Engineers awarded by President Obama (2009). In 2013, she was made an honorary citizen of her hometown of Cluj-Napoca, Romania.

Speaker: Eduard Hovy

Abstract: In modern automated QA research, what differentiates simple/shallow from complex/deep QA? Early QA research developed pattern-learning and -matching techniques to identify the appropriate factoid answer(s), and this approach has been taken a step further recently by recent neural architectures that learn and apply more-flexible generalized word/type-sequence ‘patterns’. However, many QA tasks require some sort of intermediate reasoning or other inference procedures more complex than generalization over pattern-anchored words and phrases. In one approach, people focus on the automated construction of small access functions to locate the answer in structured resources like tables or databases. But much (or most) knowledge is not structured, and what to do in this case is unclear. Most current ‘deep’ QA approaches take a one-size-fits-all approach in which they essentially hope that a multi-layer neural architecture will somehow learn to encode inference steps automatically. The main problem facing this line of research is the difficulty in defining exactly what kinds of reasoning are relevant, and what knowledge resources are required to support them. If all relevant knowledge is apparent on the surface of the question material, then shallow pattern-matching techniques (perhaps involving combinations of patterns) can surely be developed using simple/shallow methods. But if not, then (at least some of) the relevant knowledge is either internal to the QA system or resides in some additional, external resource (like the web), which makes the design and construction of general comprehensive datasets and evaluations very difficult. (In fact, the same problem faces all in-depth semantic analysis research topics, including entailment, machine reading, semantic information extraction, and more.) How should the Complex-QA community respond to this conundrum? In this talk I outline the problem, define four levels of QA, and propose a general direction for future research.

Bio: Eduard Hovy is a research full professor at the Language Technologies Institute in the School of Computer Science at Carnegie Mellon University. He also holds adjunct professorships in CMU’s Machine Learning Department and at USC (Los Angeles) and BUPT (China). Dr. Hovy completed a Ph.D. in Computer Science (Artificial Intelligence) at Yale University in 1987, and was awarded honorary doctorates from the National Distance Education University (UNED) in Madrid in 2013 and the University of Antwerp in 2015. He is one of the initial 17 Fellows of the Association for Computational Linguistics (ACL) and also a Fellow of the Association for the Advancement of Artificial Intelligence (AAAI). Dr. Hovy’s research focuses on computational semantics of language, and addresses various areas in Natural Language Processing and Data Analytics, including in-depth machine reading of text, information extraction, automated text summarization, question answering, the semi-automated construction of large lexicons and ontologies, and machine translation. In early 2019 his Google h-index was 77, with over 27,000 citations. Dr. Hovy is the author or co-editor of six books and over 400 technical articles and is a popular invited speaker. From 2003 to 2015 he was co-Director of Research for the Department of Homeland Security’s Center of Excellence for Command, Control, and Interoperability Data Analytics, a distributed cooperation of 17 universities. In 2001 Dr. Hovy served as President of the international Association of Computational Linguistics (ACL), in 2001–03 as President of the International Association of Machine Translation (IAMT), and in 2010–11 as President of the Digital Government Society (DGS). Dr. Hovy regularly co-teaches Ph.D.-level courses and has served on Advisory and Review Boards for both research institutes and funding organizations in Germany, Italy, Netherlands, Ireland, Singapore, and the USA.

Speaker: Heng Ji

Abstract: The identification of complex semantic graph structures such as events and entity relations from unstructured texts, already a challenging Information Extraction task, is doubly difficult to extract from sources written in under-resourced and under-annotated languages. We investigate the suitability of cross-lingual cross-media graph structure transfer techniques for these tasks. Previous efforts on cross-lingual transfer are limited to sequence level. In contrast, we observe that relational facts are typically expressed by identifiable structured graph patterns across multiple languages and data modalities. We exploit relation- and event-relevant language-universal and modality-universal features, leveraging both symbolic (including part-of-speech and dependency path) and distributional (including type representation and contextualized representation) information. We then represent all entity mentions, event triggers, and contexts into this complex and structured multilingual common space, using graph convolutional networks. In this way all the sentences from multiple languages, along with visual objects from images are represented as one shared unified graph representation. We then train a relation or event extractor from source language annotations and apply it to the target language and images. Extensive experiments on cross-lingual and cross-media relation and event transfer demonstrate that our approach achieves performance comparable to state-of-the-art supervised models trained on up to 3,000 manually annotated mentions, and dramatically outperforms methods learned from flat representation. I will show a preliminary demo on applying the resultant event knowledge base for automatic history book generation.

Bio: Heng Ji is a professor at Computer Science Department of University of Illinois at Urbana-Champaign. She received her B.A. and M. A. in Computational Linguistics from Tsinghua University, and her M.S. and Ph.D. in Computer Science from New York University. Her research interests focus on Natural Language Processing, especially on Information Extraction and Knowledge Base Population. She is selected as "Young Scientist" and a member of the Global Future Council on the Future of Computing by the World Economic Forum in 2016 and 2017. The awards she received include "AI's 10 to Watch" Award by IEEE Intelligent Systems in 2013 and NSF CAREER award in 2009. She has coordinated the NIST TAC Knowledge Base Population task since 2010. She is the associate editor for IEEE/ACM Transaction on Audio, Speech, and Language Processing, and served as the Program Committee Co-Chair of many conferences including NAACL-HLT2018.

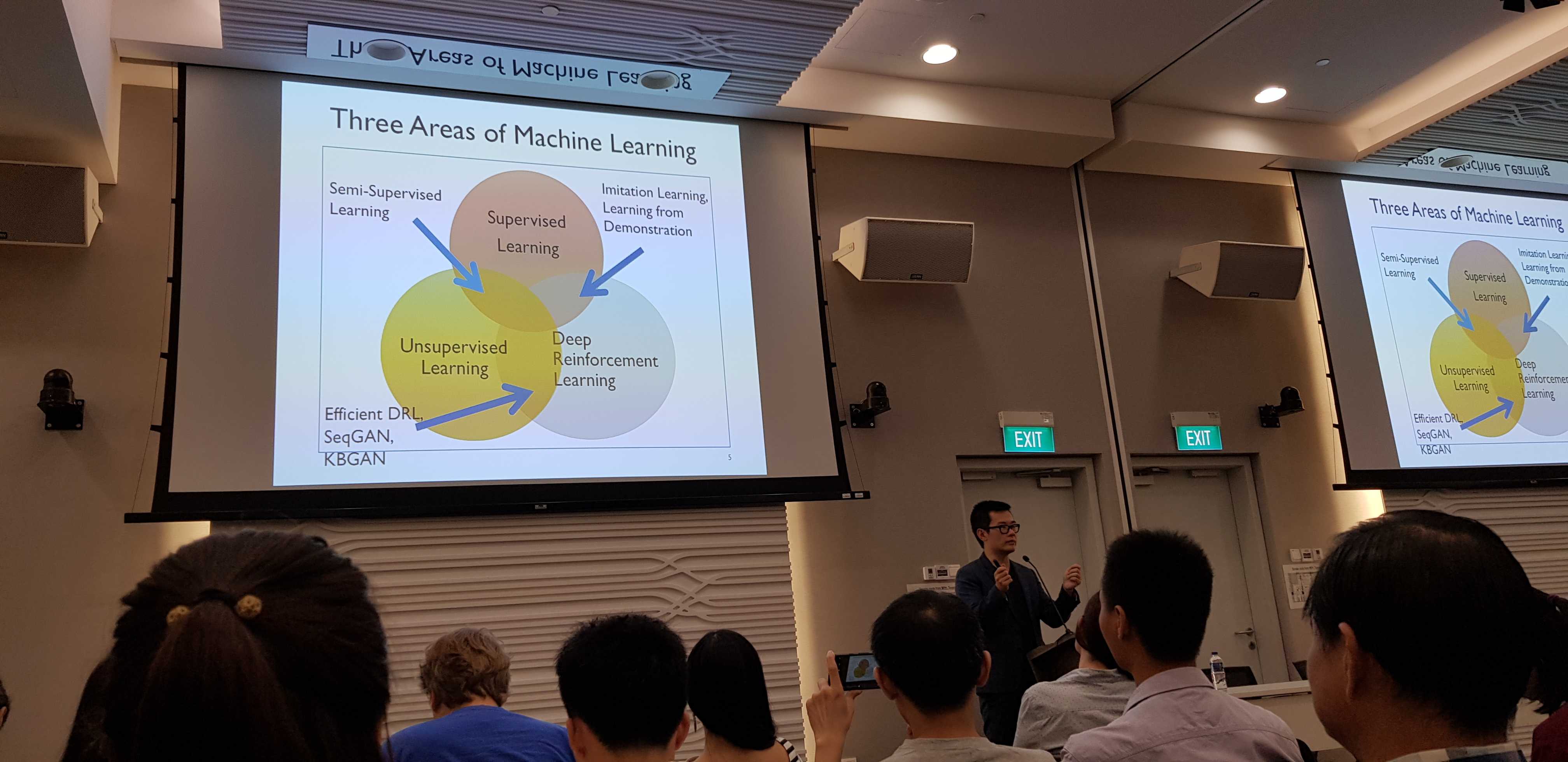

Speaker: William Wang

Abstract: With the vast amount of language data available in digital form, now is a good opportunity to move beyond traditional supervised learning methods. The core research question that I will address in this talk is the following: What is self-supervised learning? What is the success story behind BERT and XLNet? How can we design self-supervised deep learning methods to operate over rich language and knowledge representations? In this talk, I will describe some examples of my work in advancing the state-of-the-arts in methods of self-supervised learning for NLP, including: 1) AREL, a self-adaptive inverse reinforcement learning agent for visual storytelling; and 2) Self-supervised learning that goes beyond surface-level representation learning. I will conclude this talk by describing my other research interests in the interdisciplinary field of AI/ML/NLP/Vision.

Bio: William Wang is an Assistant Professor in the Department of Computer Science at the University of California, Santa Barbara. He is the Director of UCSB's Responsible Machine Learning Center. He received his PhD from School of Computer Science, Carnegie Mellon University in 2016. He has broad interests in machine learning approaches to data science, including natural language processing, statistical relational learning, information extraction, computational social science, dialogue, and vision. He directs UCSB’s NLP Group (nlp.cs.ucsb.edu): in two years, UCSB advanced in the NLP area from an undefined ranking position to top 3 in 2018 according to CSRankings.org. He has published more than 80 papers at leading NLP/AI/ML conferences and journals, and received best paper awards (or nominations) at ASRU 2013, CIKM 2013, EMNLP 2015, and CVPR 2019, a DARPA Young Faculty Award (Class of 2018), a Google Faculty Research Award (2018), two IBM Faculty Awards in 2017 and 2018, a Facebook Research Award in 2018, an Adobe Research Award in 2018, and the Richard King Mellon Presidential Fellowship in 2011. He frequently serves as an Area Chair for NAACL, ACL, EMNLP, and AAAI. He is an alumnus of Columbia University, Yahoo! Labs, Microsoft Research Redmond, and University of Southern California. In addition to research, William enjoys writing scientific articles that influence the broader online community: his microblog Weibo has 116,000+ followers and more than 2,000,000 views each month. His work and opinions frequently appear at major international media outlets such as Wired, VICE, Fast Company, NASDAQ, Scientific American, The Next Web, The Brookings Institution, Law.com, and Mental Floss.

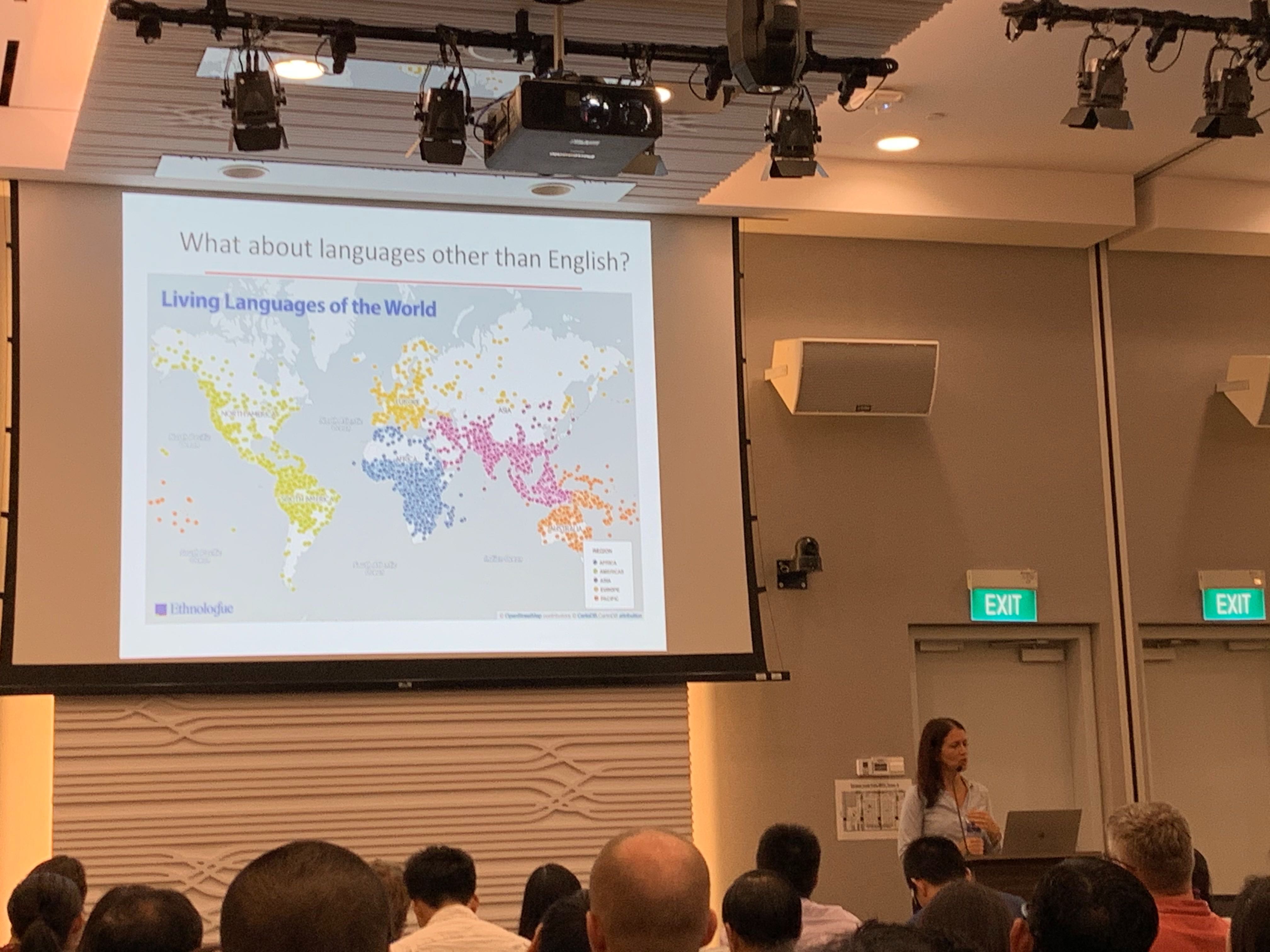

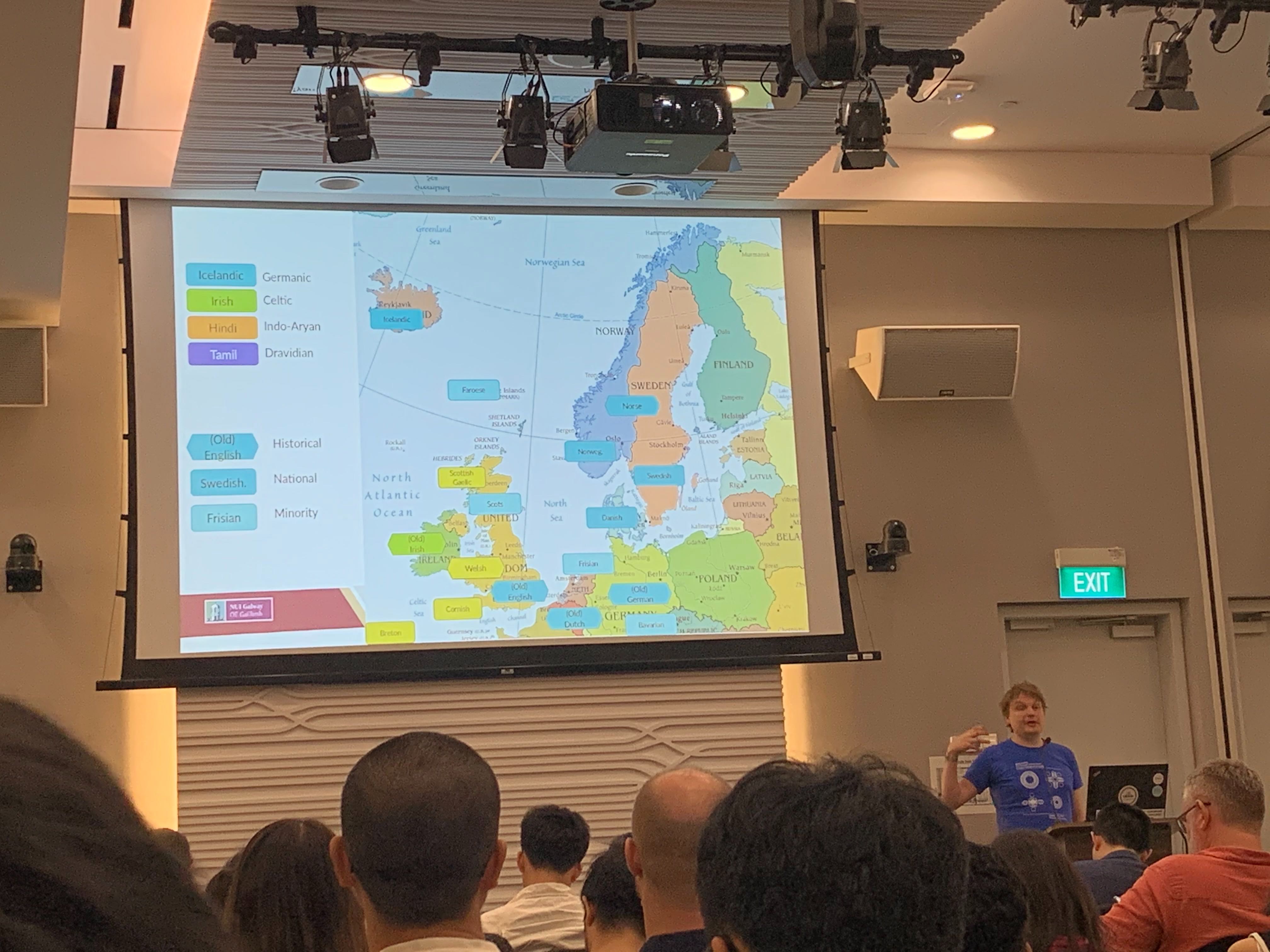

Speaker: John McCrae

Abstract: NLP is moving increasingly from working on only a few well-resourced languages to meet the challenges of a globalising world connected through the internet. As such, connecting all these languages is of increasing importance and new technologies to link across language boundaries are necessary. I will present some results from the ELEXIS (European Lexicographic Infrastructure) project, which aims to connect lexicographers with NLP researchers across Europe and link their dictionaries through a new dictionary matrix. Secondly, I will talk about the Cardamom project, which aims to extend NLP technology to minority languages and also historical languages by exploiting information from well-resourced closely-related languages.

Bio: John McCrae is a lecturer above-the-bar at the Data Science Institute and Insight Centre for Data Analytics at the National University of Ireland Galway and the leader of the Unit for Linguistic Data. He is the coordinator of the Prêt-à-LLOD project and work package leader in the ELEXIS infrastructure. His research interests span Machine learning methods for NLP, Digital Humanities, Machine translation and multilingualism, etc. He obtained his PhD from the National Institute of Informatics in Tokyo under the supervision of Nigel Collier and until 2015 he was a post-doctoral researcher at the University of Bielefeld in Bielefeld, Germany in Prof. Philipp Cimiano's group, AG Semantic Computing.

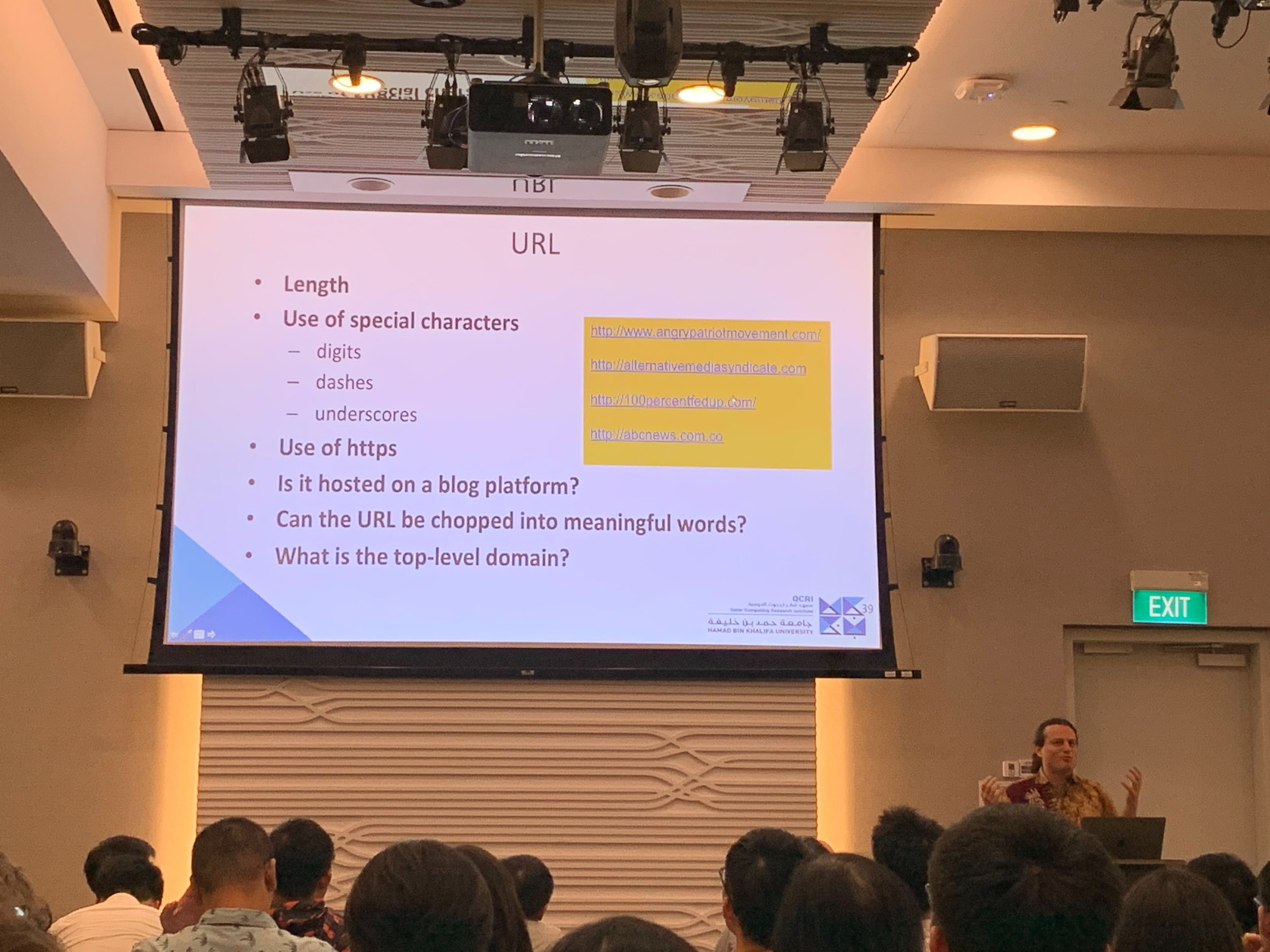

Speaker: Preslav Nakov

Abstract: Given the recent proliferation of disinformation online, there has been also growing research interest in automatically debunking rumors, false claims, and "fake news". A number of fact-checking initiatives have been launched so far, both manual and automatic, but the whole enterprise remains in a state of crisis: by the time a claim is finally fact-checked, it could have reached millions of users, and the harm caused could hardly be undone. An arguably more promising direction is to focus on fact-checking entire news outlets, which can be done in advance. Then, we could fact-check the news before they were even written: by checking how trustworthy the outlets that published them are. We will show how we do this in the Tanbih news aggregator (http://www.tanbih.org/), which makes users aware of what they are reading. In particular, we develop media profiles that show the general factuality of reporting, the degree of propagandistic content, hyper-partisanship, leading political ideology, general frame of reporting, stance with respect to various claims and topics, as well as audience reach and audience bias in social media.

Bio: Dr. Preslav Nakov is a Principal Scientist at the Qatar Computing Research Institute (QCRI), HBKU. His research interests include computational linguistics, "fake news" detection, fact-checking, machine translation, question answering, sentiment analysis, lexical semantics, Web as a corpus, and biomedical text processing. He received his PhD degree from the University of California at Berkeley (supported by a Fulbright grant), and he was a Research Fellow in the National University of Singapore, a honorary lecturer in the Sofia University, and research staff at the Bulgarian Academy of Sciences. At QCRI, he leads the Tanbih project (http://tanbih.qcri.org), developed in collaboration with MIT, which aims to limit the effect of "fake news", propaganda and media bias by making users aware of what they are reading. Dr. Nakov is the Secretary of ACL SIGLEX and of ACL SIGSLAV, and a member of the EACL advisory board. He is member of the editorial board of TACL, C&SL, NLE, AI Communications, and Frontiers in AI. He is also on the Editorial Board of the Language Science Press Book Series on Phraseology and Multiword Expressions. He co-authored a Morgan & Claypool book on Semantic Relations between Nominals, two books on computer algorithms, and many research papers in top-tier conferences and journals. He also received the Young Researcher Award at RANLP'2011. He was also the first to receive the Bulgarian President's John Atanasoff award, named after the inventor of the first automatic electronic digital computer. Dr. Nakov's research was featured by over 100 news outlets, including Forbes, Boston Globe, Aljazeera, MIT Technology Review, Science Daily, Popular Science, Fast Company, The Register, WIRED, and Engadget, among others.

Panel Discussions: Ethics in AI

The following speakers have accepted to serve as panelists for the panel discussion at SSNLP 2019. You can view their detailed information by clicking the images. Eduard Hovy and other academic speakers will also be discussants on the panel (TBC).

Bio: Liling Tan is a Research Scientist at at Rakuten Institute of Technology Singapore. Currently, he works on machine translation and language learning technologies. Previously, he was an early stage researcher in Saarland University working on dictionaries, ontologies and machine translation. Before that, he studied at NTU working on crosslingual semantics and multilingual corpora.

Bio: Shafiq Joty is an Assistant professor at the School of Computer Science and Engineering, NTU. He is also a senior research manager at Salesforce Research. He holds a PhD in Computer Science from the University of British Columbia. His work has primarily focused on developing language analysis tools (e.g., parsers, NER, coherence models), and exploiting these tools effectively in downstream applications for question answering, machine translation, image/video captioning and visual question answering. He was an area chair for ACL-2019 and EMNLP-2019. He gave tutorials on ``Discourse Analysis and Its Applications" at ACL-2019 and at ICDM-2018.

Bio: Guillermo Infante is the Chief Technology Officer of TAIGER, a global Artificial Intelligence company headquartered in Singapore with offices in five other countries across Europe, America and Asia-Pacific. He leads the technology team to develop cognitive solutions that help organisations to optimise operational efficiencies. With over a decade in the computer science and software engineering fields, Guillermo drives research and development projects with Artificial Intelligence and Semantic Technologies. Prior to join TAIGER, he worked as a professor at leading universities of Spain and Latin America. He was also a researcher in the field of Semantics & AI and his work contributed to expand the knowledge base of several technology systems and industries. His career interest is mainly on AI, Natural Language Processing and Understanding, as well as Knowledge Representation and Reasoning. His work has been published in multiple peer- reviewed international journals. Guillermo holds a PhD in Computer Science and a MSc degree in Web Engineering from the University of Oviedo (Spain), and an executive MSc degree in Innovation Management from the EOI Business School (Madrid, Spain).

Organizers

| PC Chair: |

Kokil Jaidka, Nanyang Technological University Soujanya Poria, Singapore University of Technology and Design |

| General Chair: | Min-Yen Kan, National University of Singapore |

| Co-organizers: |

Ai Ti Aw, Institute for Infocomm Research Francis Bond, Nanyang Technological University Nancy Chen, Institute for Infocomm Research Jing Jiang, Singapore Management University Shafiq Joty, Nanyang Technological University Hai zhou Li, National University of Singapore Wei Lu, Singapore University of Technology and Design Hwee Tou Ng, National University of Singapore Jian Su, Institute for Infocomm Research |

Photos

Poster

Report

Registration

Location

SSNLP 2019 will be held at MPH1, level 1, Innovis, 2 Fusionopolis Way, Singapore 138634.Past SSNLP

SSNLP 2018Contact us

Contact email: If you have any enquiries, please drop an email to Kokil Jaidka or Soujanya Poria.